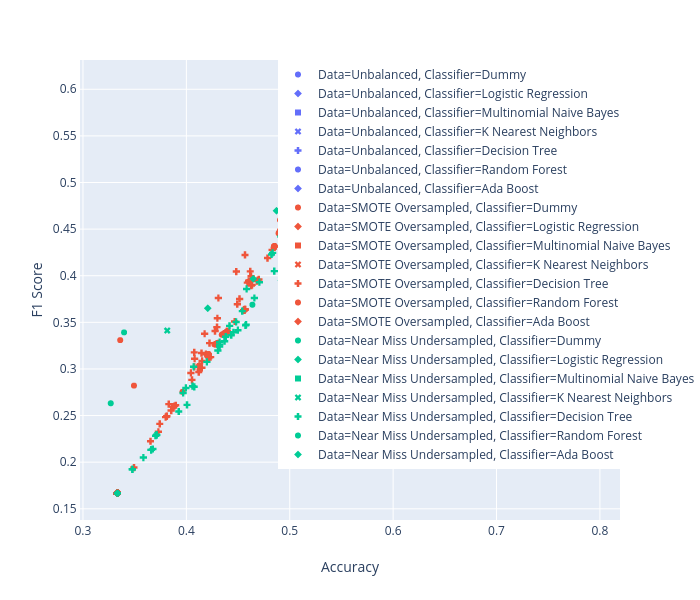

F1 Score Vs Accuracy

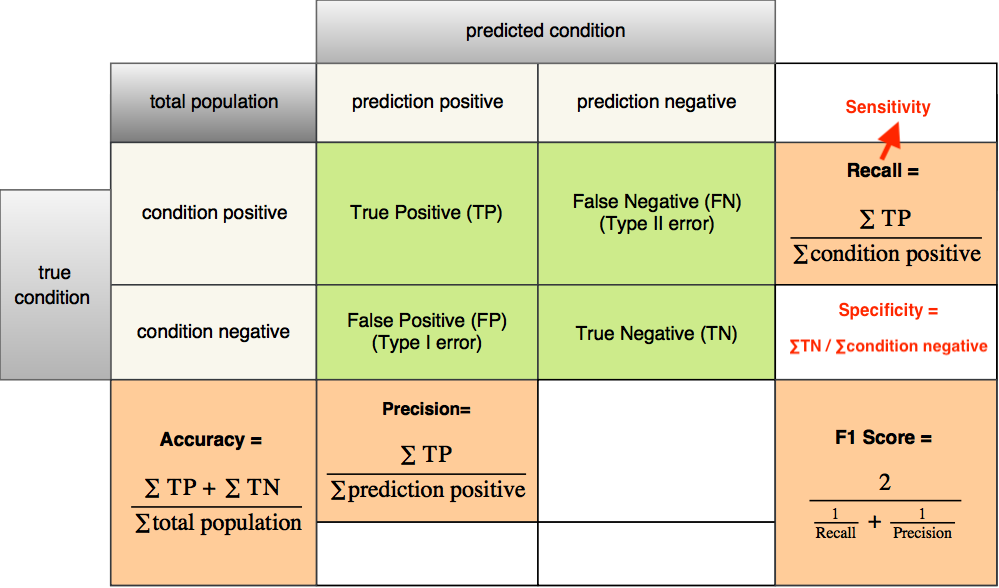

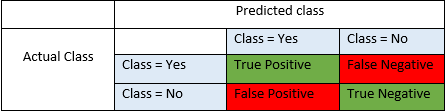

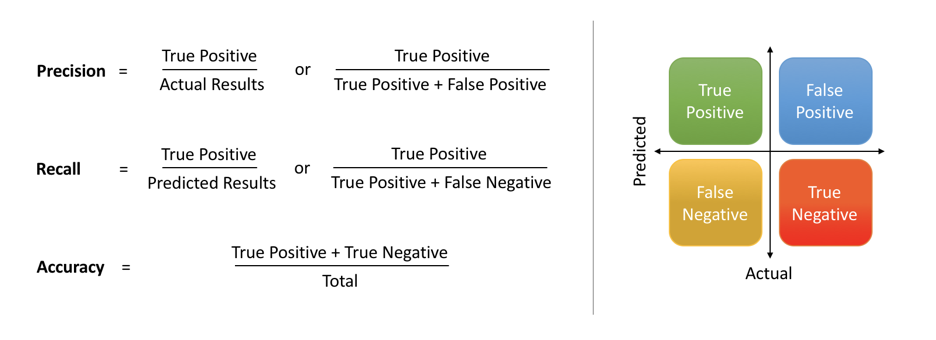

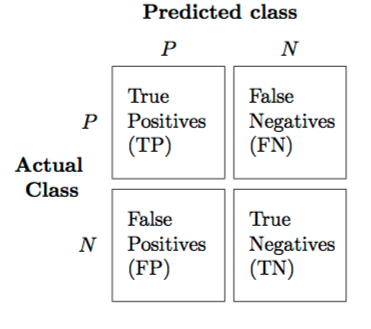

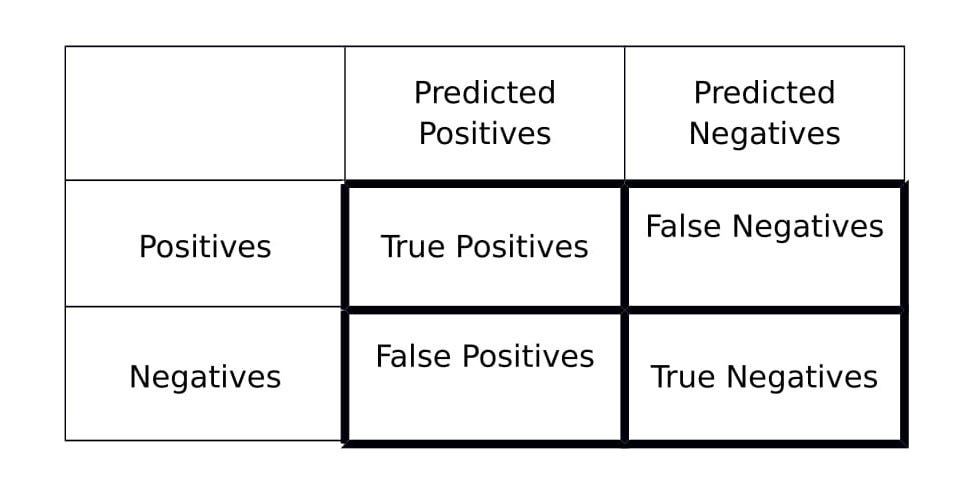

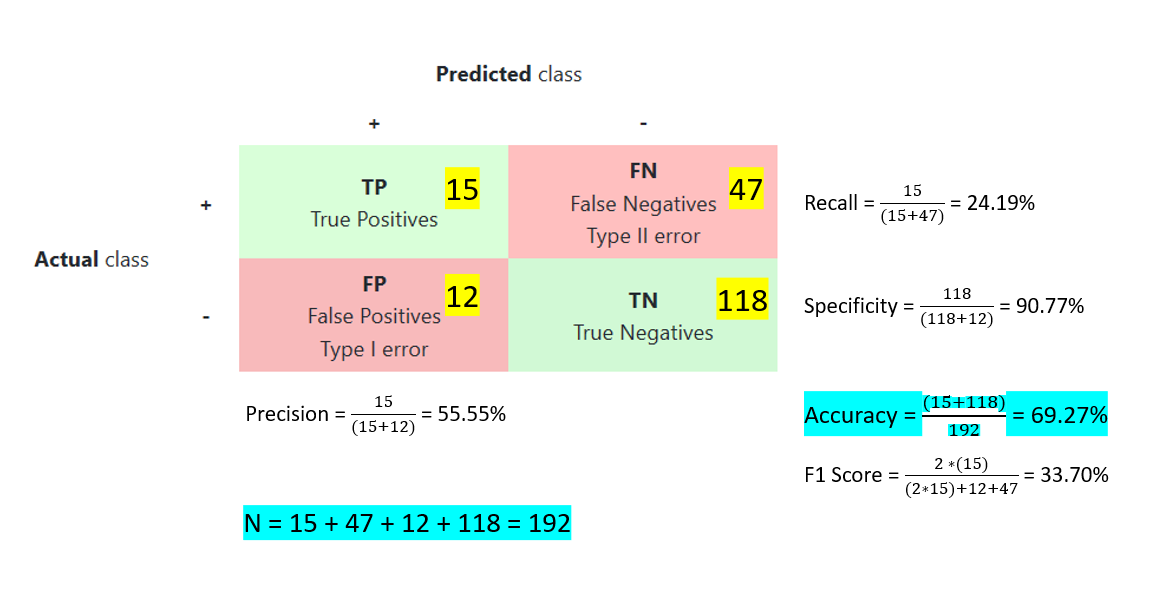

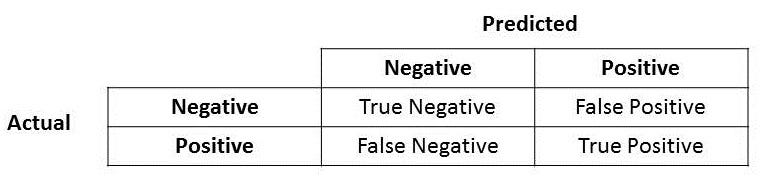

The F1 score therefore takes both false positives and false negatives into account.

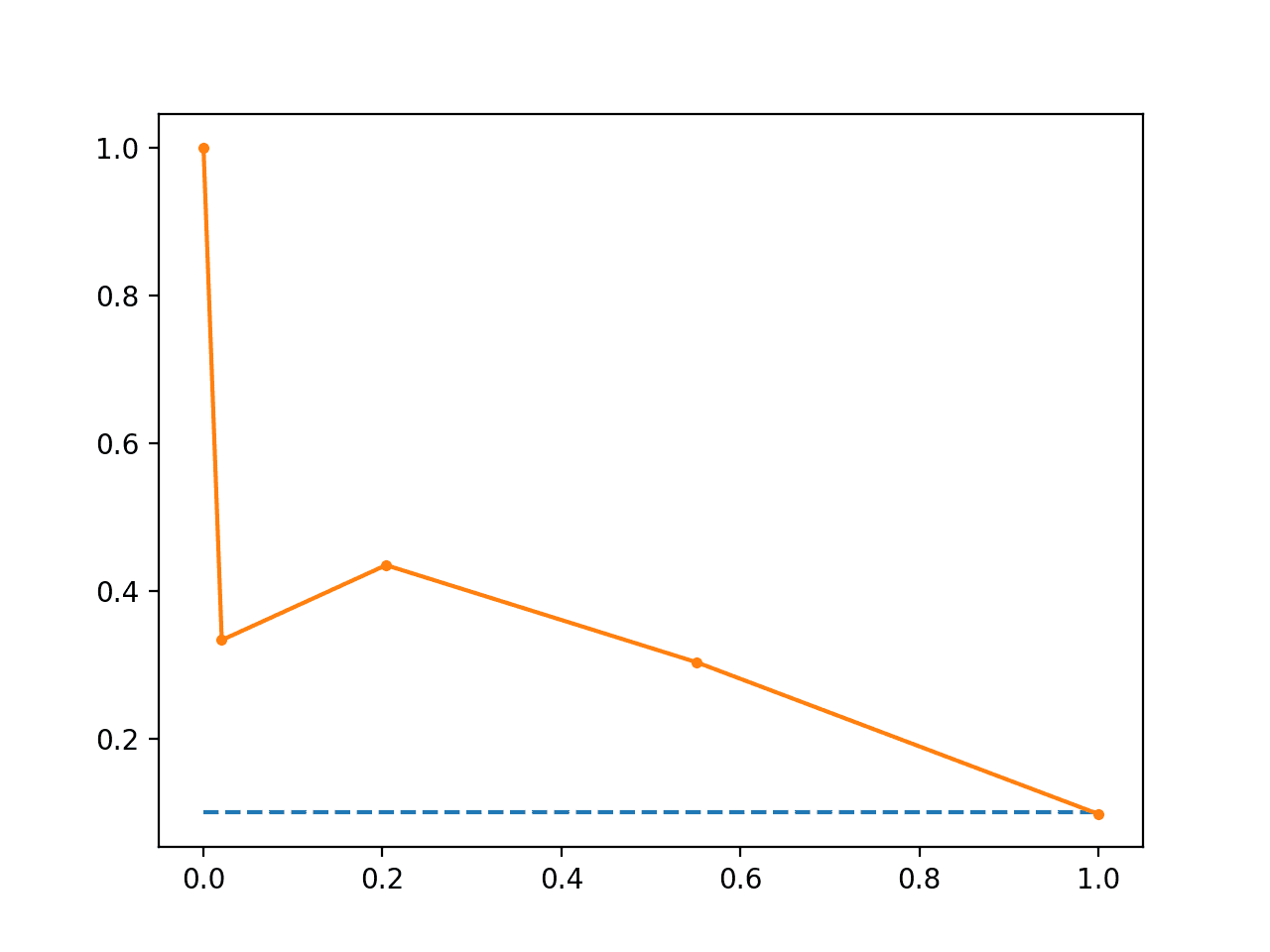

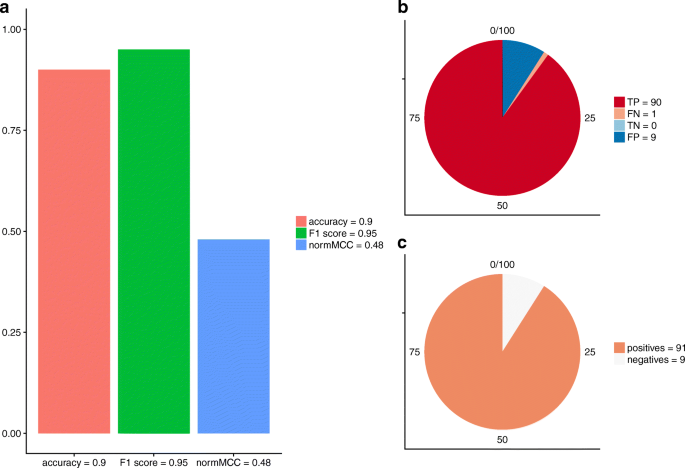

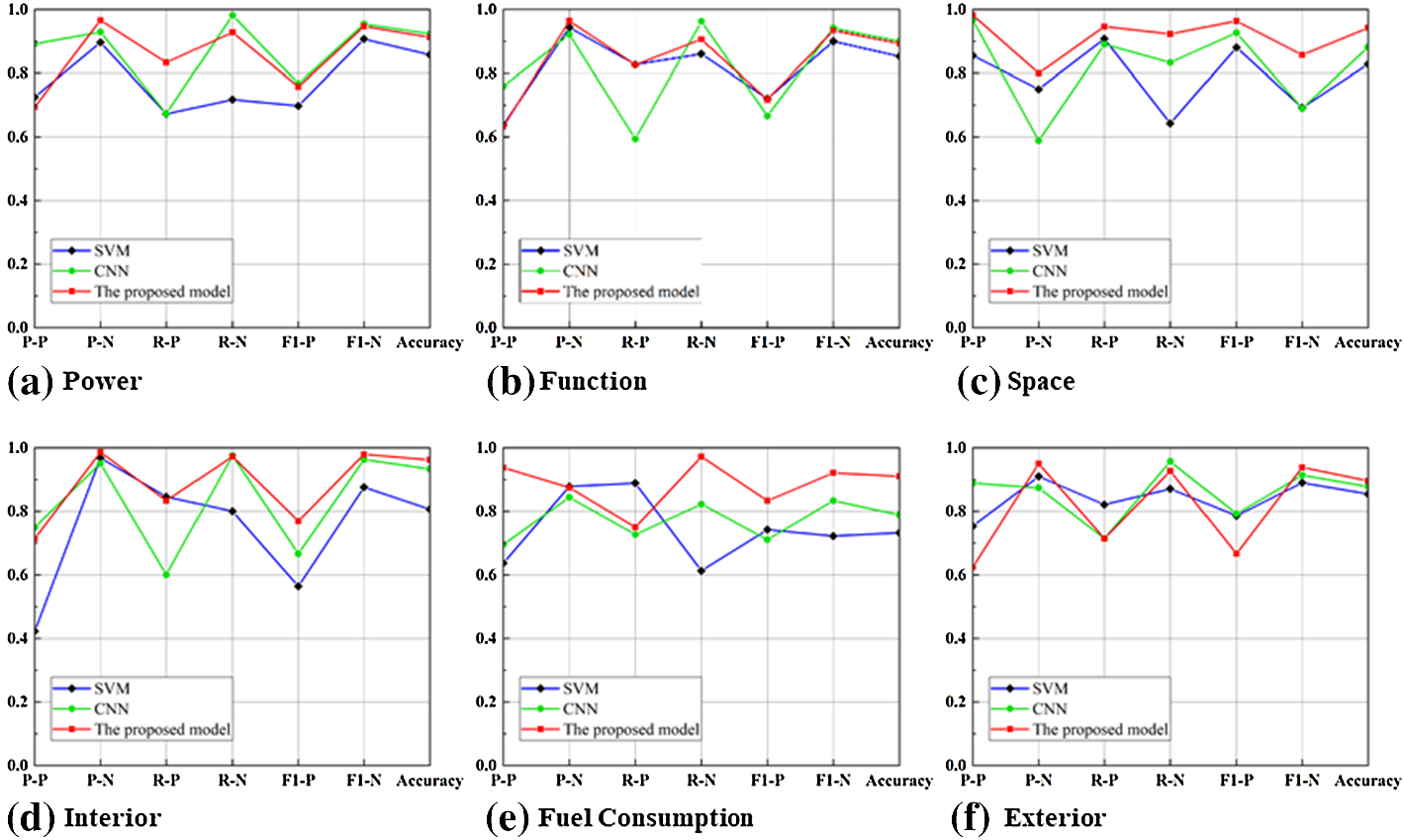

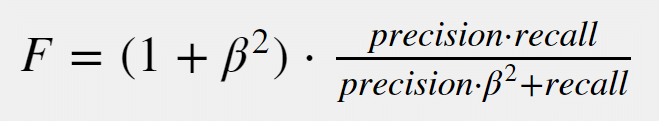

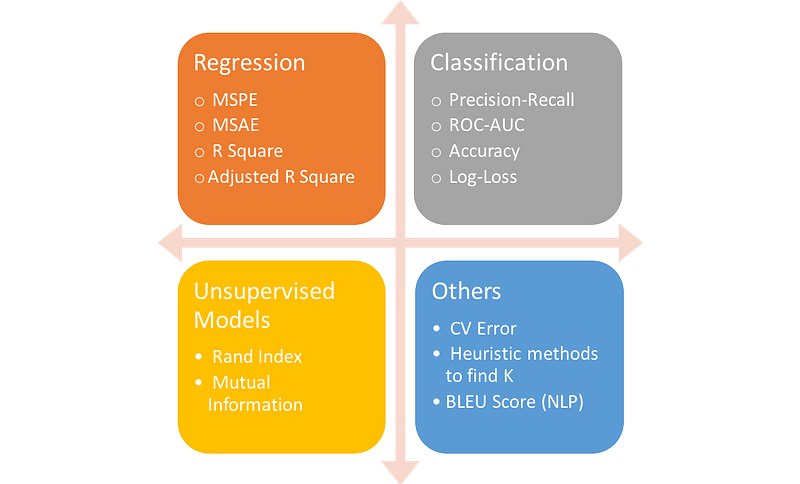

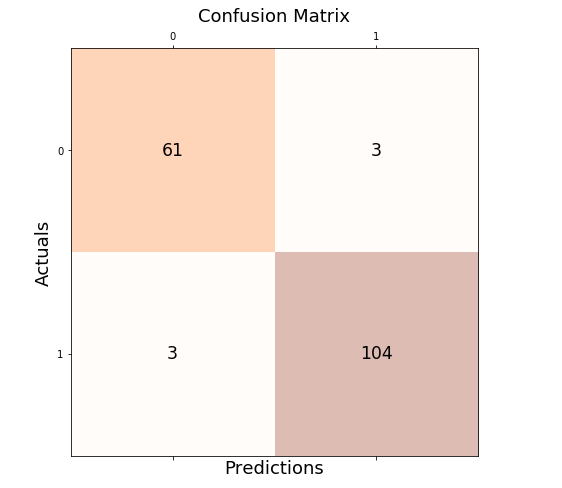

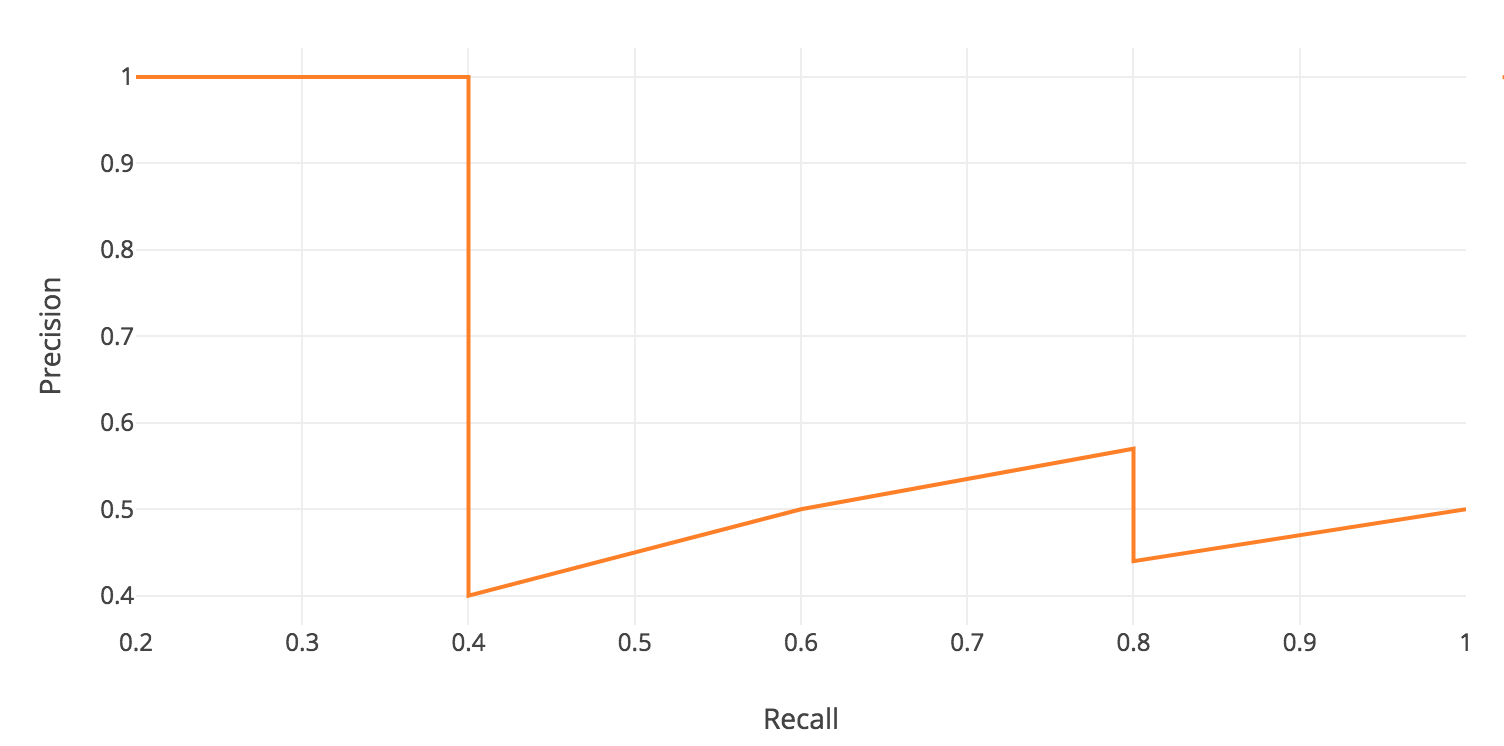

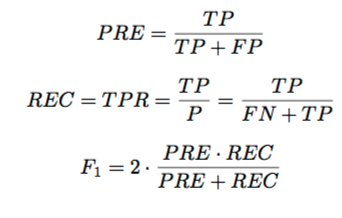

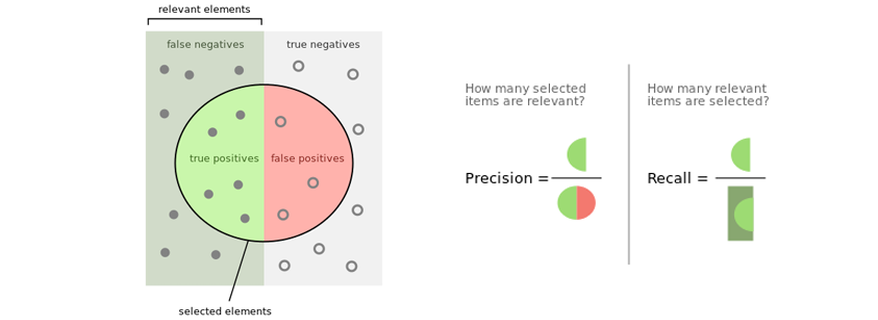

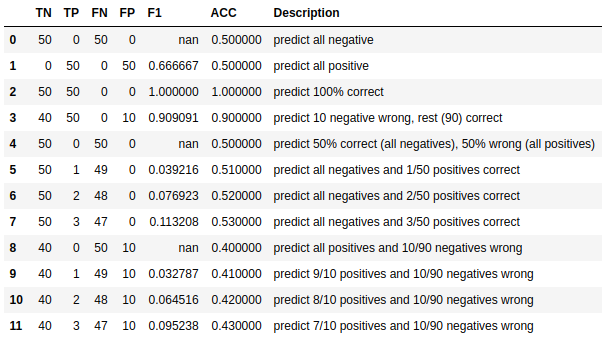

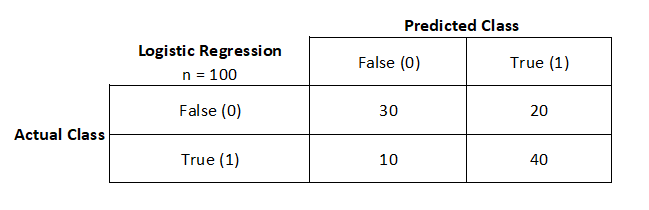

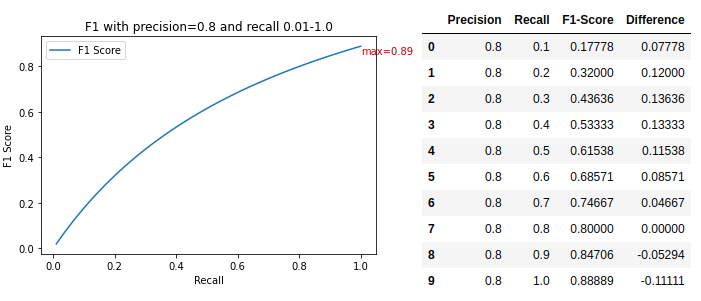

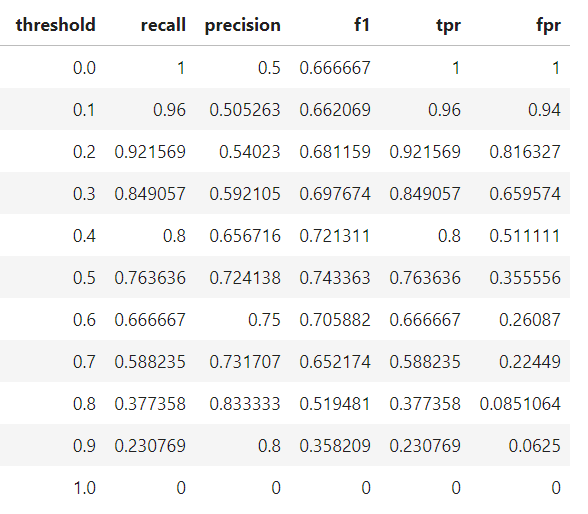

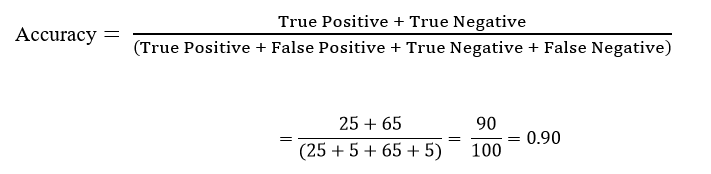

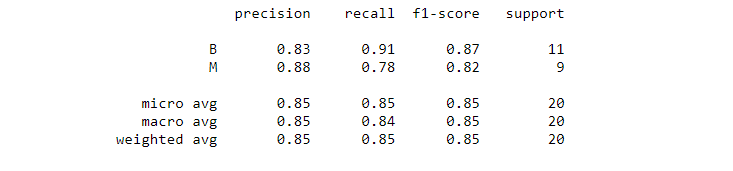

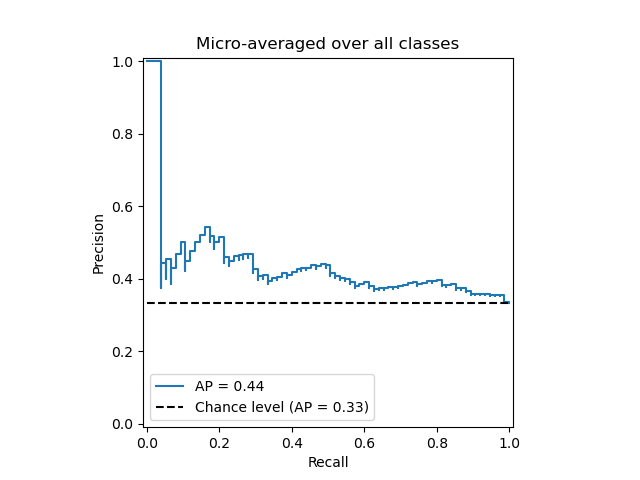

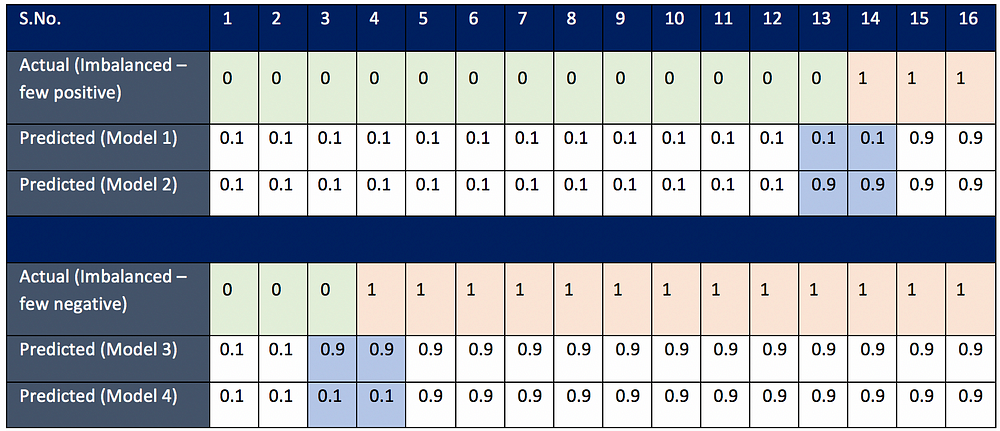

If we are not sure whether to focus on precision or recall, we can use the F1 score to evaluate model performance. Accuracy is a great measure, but only when the dataset is symmetric: the counts of false negatives and false positives are close, and the two kinds of error have similar costs. Accuracy is used when the true positives and true negatives matter most, while the F1 score is used when the false negatives and false positives are crucial. Accuracy can be used when the class distribution is roughly even. The F1 score is the harmonic mean of precision and recall.

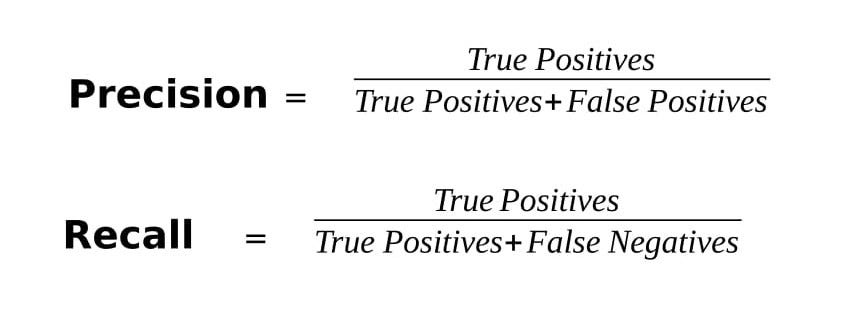

In statistical analysis of binary classification, the F-score or F-measure is a measure of a test's accuracy. It is calculated from the precision and recall of the test, where the precision is the number of correctly identified positive results divided by the number of all results identified as positive, including those identified incorrectly, and the recall is the number of correctly identified positive results divided by the number of all samples that should have been identified as positive. If false positives and false negatives carry different costs, F1 is a better guide than accuracy.
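The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the confusion-matrix counts are made up for the example, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from confusion-matrix counts.

    Assumption: a ratio with a zero denominator is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def accuracy(tp, tn, fp, fn):
    """Fraction of all observations that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Hypothetical counts, chosen only for illustration.
tp, fp, fn, tn = 8, 2, 4, 86
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
acc = accuracy(tp, tn, fp, fn)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f} accuracy={acc:.3f}")
```

Note that `tn` appears only in `accuracy`: precision, recall, and F1 never look at the true negatives.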

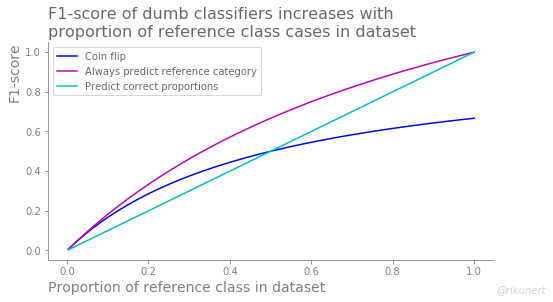

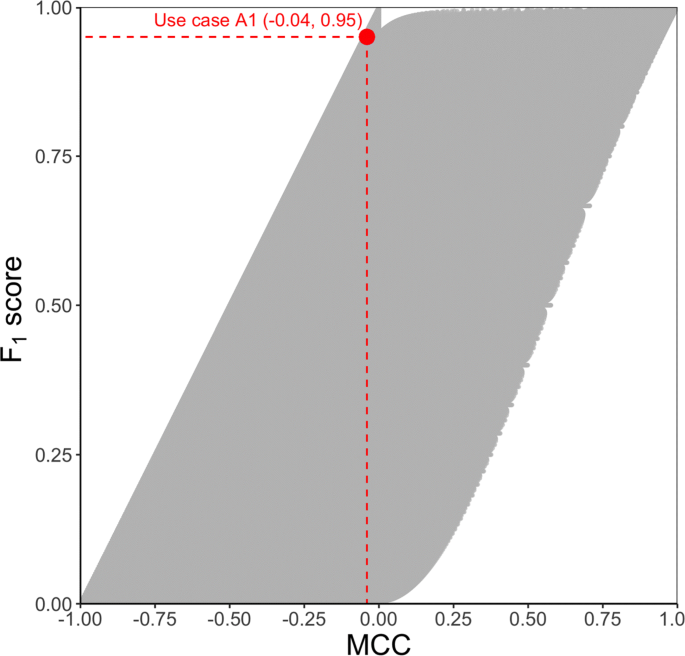

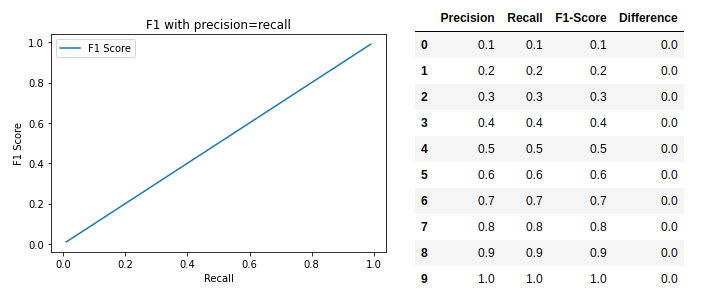

The F1 score is used to evaluate binary classification systems, which classify examples as positive or negative. Intuitively, it is not as easy to understand as accuracy, but F1 is usually more useful than accuracy, especially if you have an uneven class distribution. Remember that the F1 score balances precision and recall on the positive class, while accuracy looks at correctly classified observations, both positive and negative. The two can therefore diverge: the F1 score is independent of the true negatives, while accuracy is not.
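To see why an uneven class distribution matters, here is a small sketch with invented data: 10 positives among 1,000 samples, and a hypothetical classifier that finds only 2 of them while raising 1 false alarm. The labels, the predictions, and the helper name `confusion_counts` are all assumptions made for the example.

```python
def confusion_counts(y_true, y_pred):
    """Count (tp, tn, fp, fn) for binary labels encoded as 0 and 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


# Invented, heavily imbalanced data: 10 positives, 990 negatives.
y_true = [1] * 10 + [0] * 990
# Hypothetical predictions: 2 positives found, 8 missed, 1 false alarm.
y_pred = [1] * 2 + [0] * 8 + [1] * 1 + [0] * 989

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
imbalanced_accuracy = (tp + tn) / len(y_true)
# Equivalent closed form of the harmonic mean of precision and recall.
imbalanced_f1 = 2 * tp / (2 * tp + fp + fn)

print(f"accuracy={imbalanced_accuracy:.3f} f1={imbalanced_f1:.3f}")
```

Accuracy comes out above 0.99 because the 989 true negatives dominate the count, while F1 stays near 0.31 because most of the positive class was missed.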

From the theory alone, accuracy and the F1 score cannot be identical for every dataset. The F-score is a way of combining the precision and recall of the model, and it is defined as the harmonic mean of the model's precision and recall. Take a dataset where F1 = accuracy and add true negatives to it: you get F1 ≠ accuracy. F1 is best if you have an uneven class distribution.
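The true-negative argument can be checked numerically. The sketch below uses invented counts: it starts from a confusion matrix where F1 and accuracy happen to coincide (which occurs whenever TP equals TN), then adds 100 extra true negatives. The function name `f1_and_accuracy` is hypothetical.

```python
def f1_and_accuracy(tp, tn, fp, fn):
    """Return (F1, accuracy) from confusion-matrix counts."""
    f1 = 2 * tp / (2 * tp + fp + fn)           # no tn term anywhere
    acc = (tp + tn) / (tp + tn + fp + fn)      # tn in numerator and denominator
    return f1, acc


# Invented counts with tp == tn, so F1 and accuracy start out equal.
f1_before, acc_before = f1_and_accuracy(tp=40, tn=40, fp=10, fn=10)

# Same predictions on the positives, plus 100 additional true negatives.
f1_after, acc_after = f1_and_accuracy(tp=40, tn=140, fp=10, fn=10)

print(f"before: f1={f1_before:.2f} accuracy={acc_before:.2f}")
print(f"after:  f1={f1_after:.2f} accuracy={acc_after:.2f}")
```

F1 stays at 0.80 in both cases, while accuracy rises from 0.80 to 0.90, so the two metrics cannot agree on every dataset.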