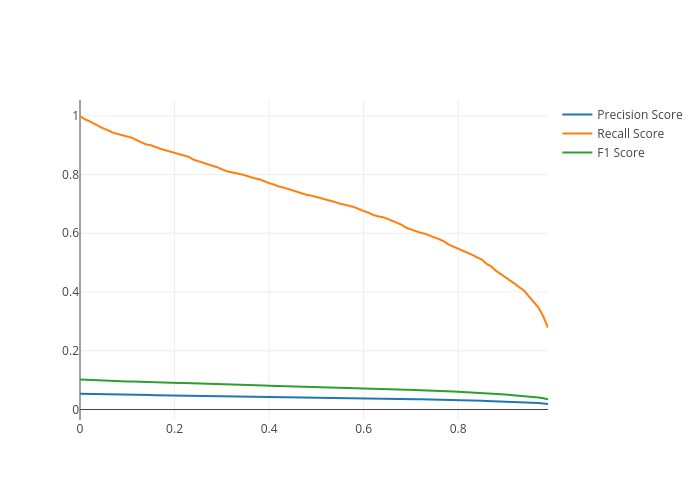

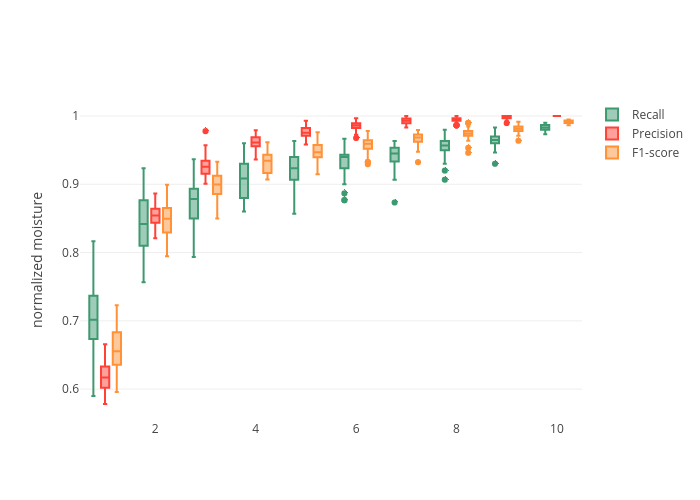

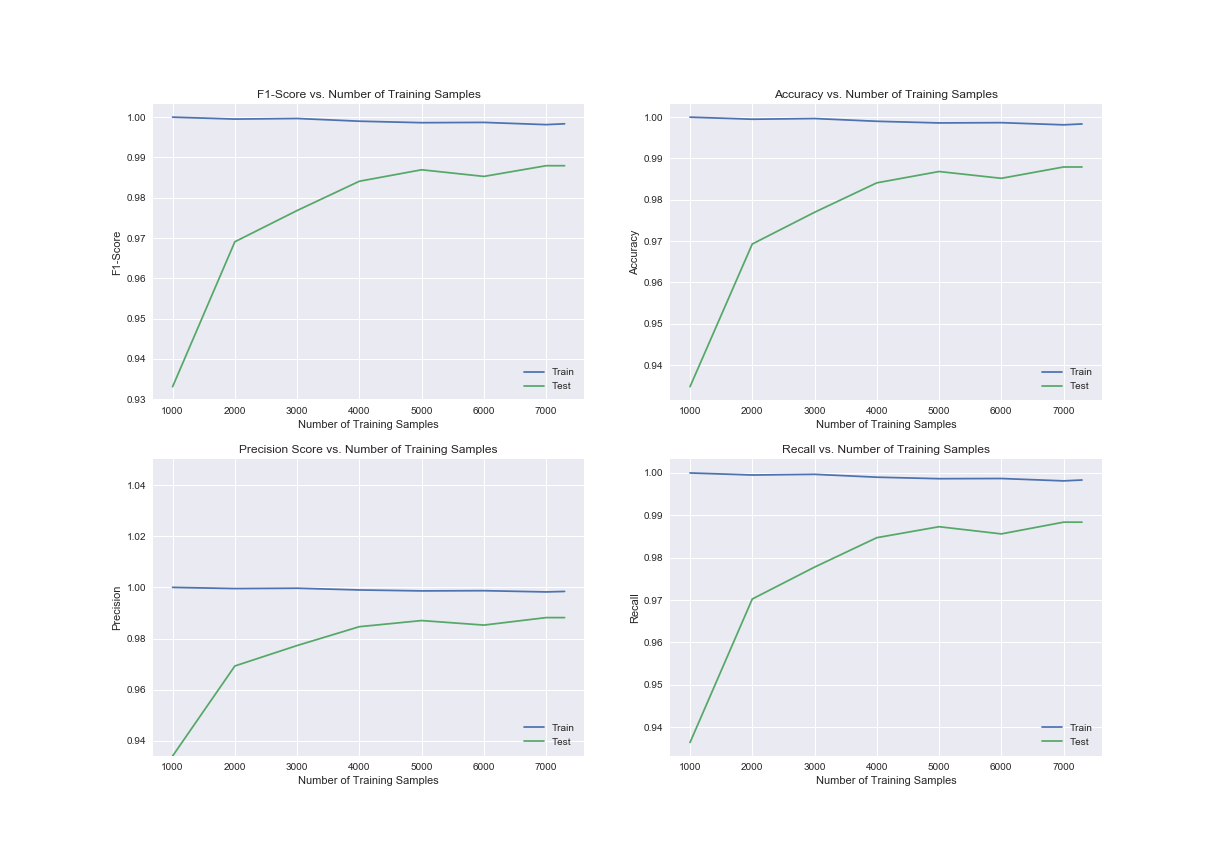

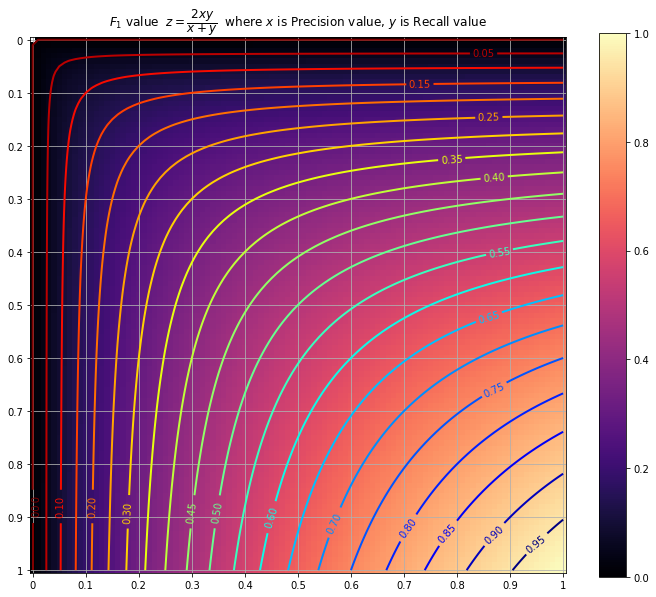

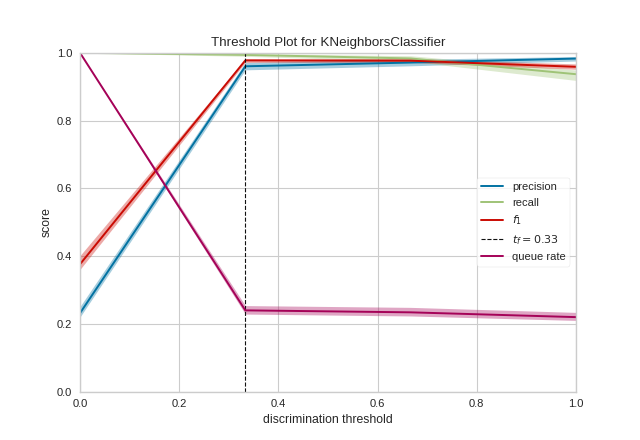

F1 Score Plot

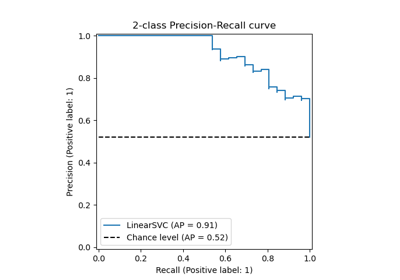

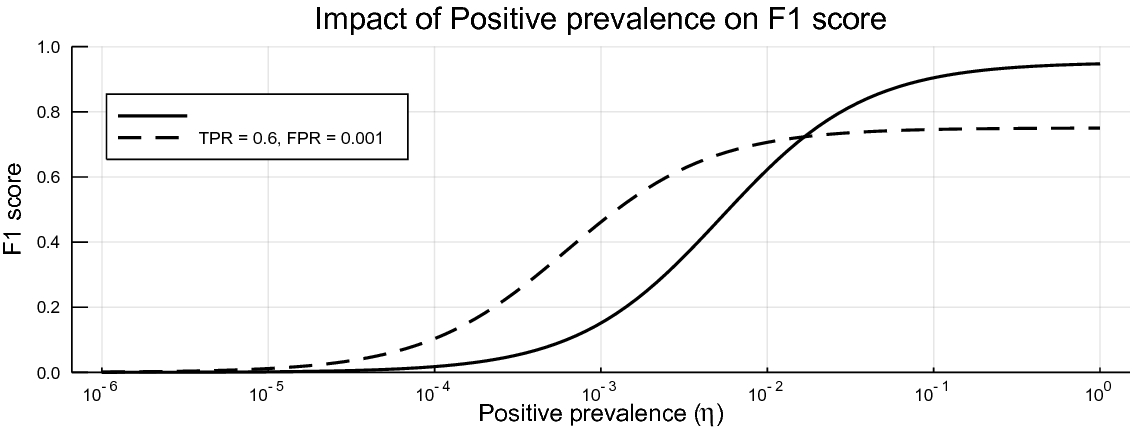

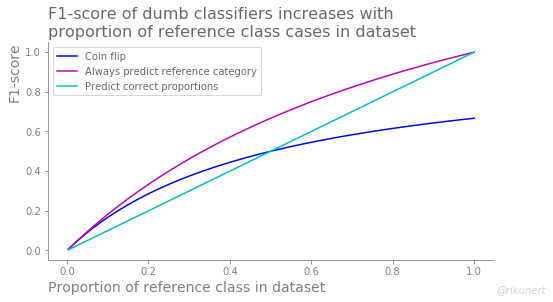

$F_1 = 2 \times \dfrac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$

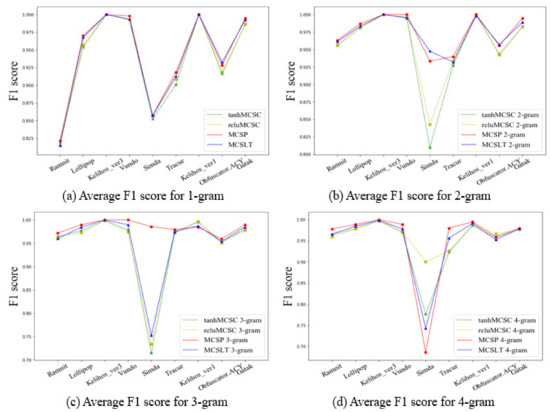

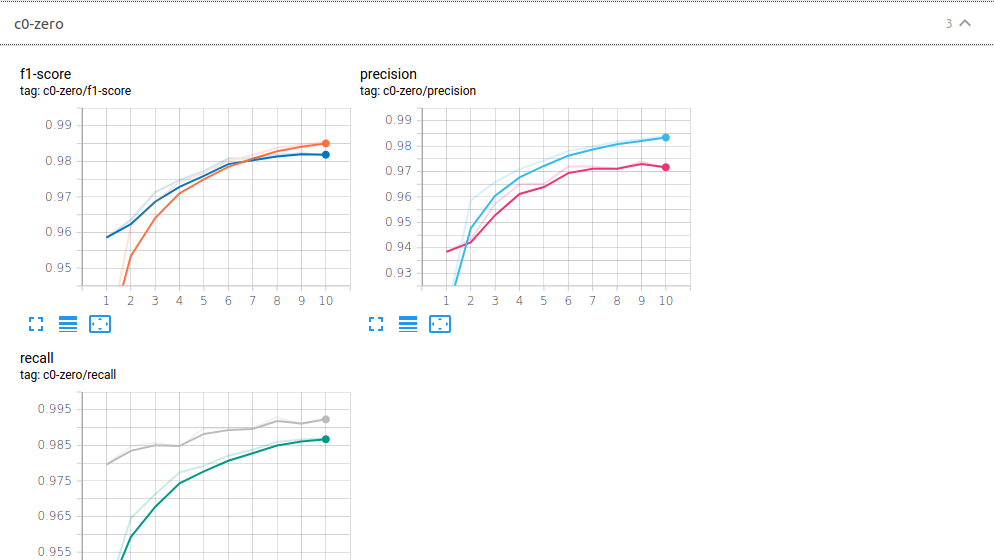

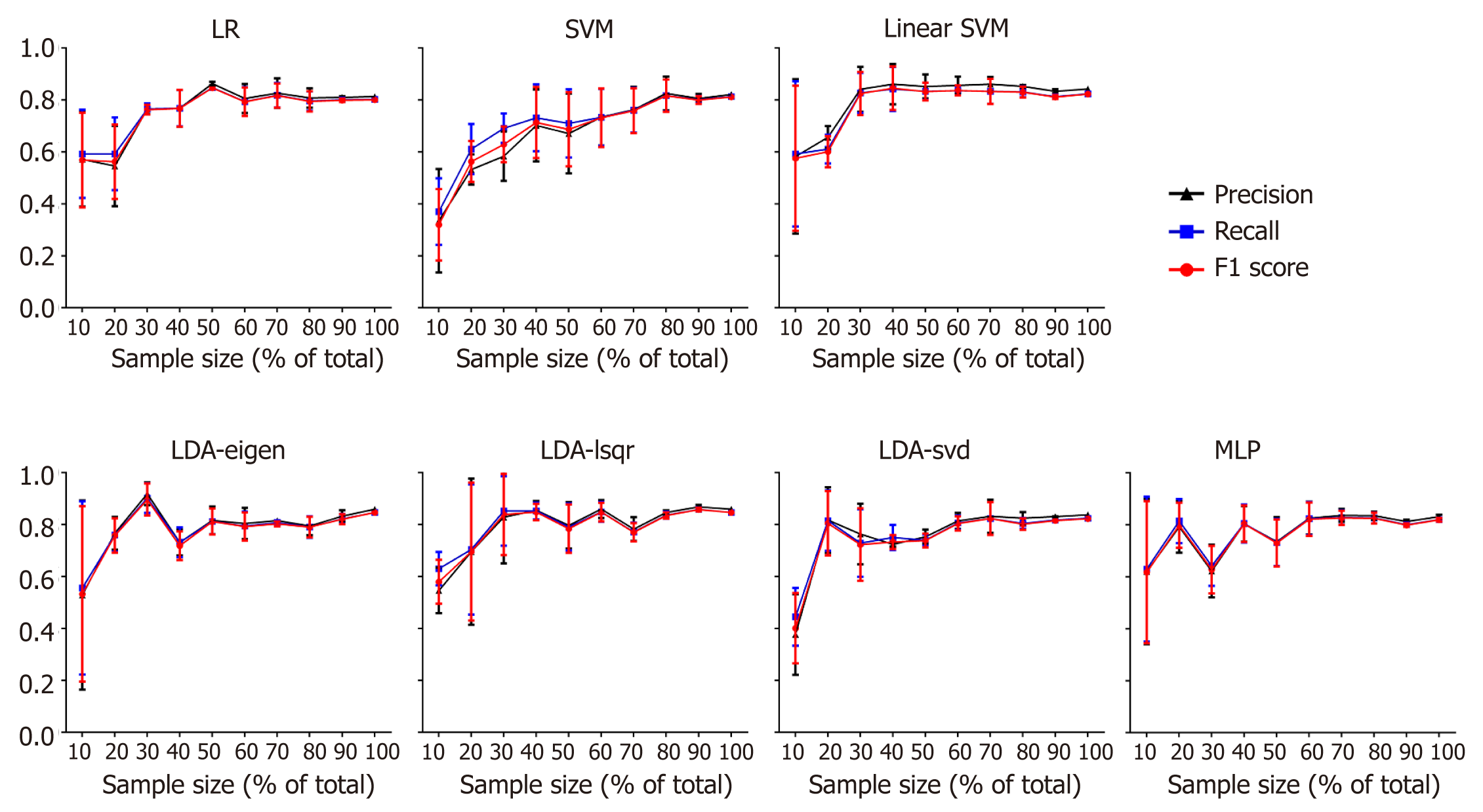

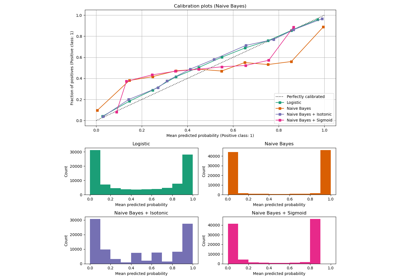

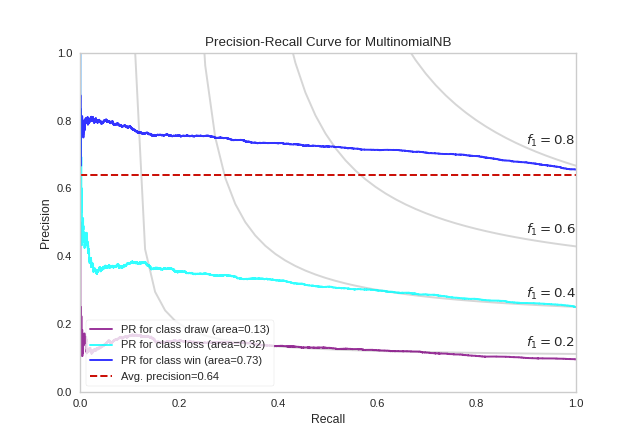

What is an F1 score plot, and what exactly would you plot? The F1 score takes both precision and recall into account. In statistical analysis of binary classification, the F-score (or F-measure) is a measure of a test's accuracy.

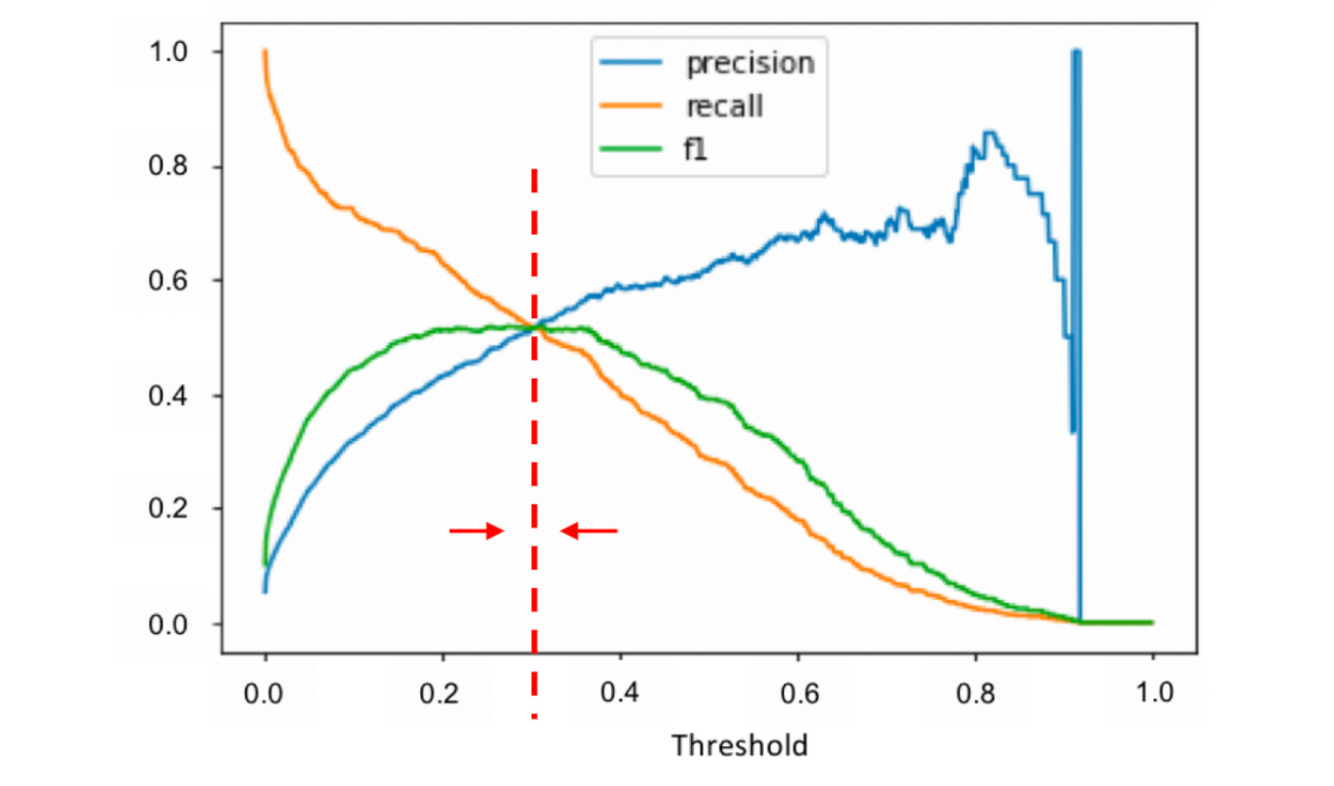

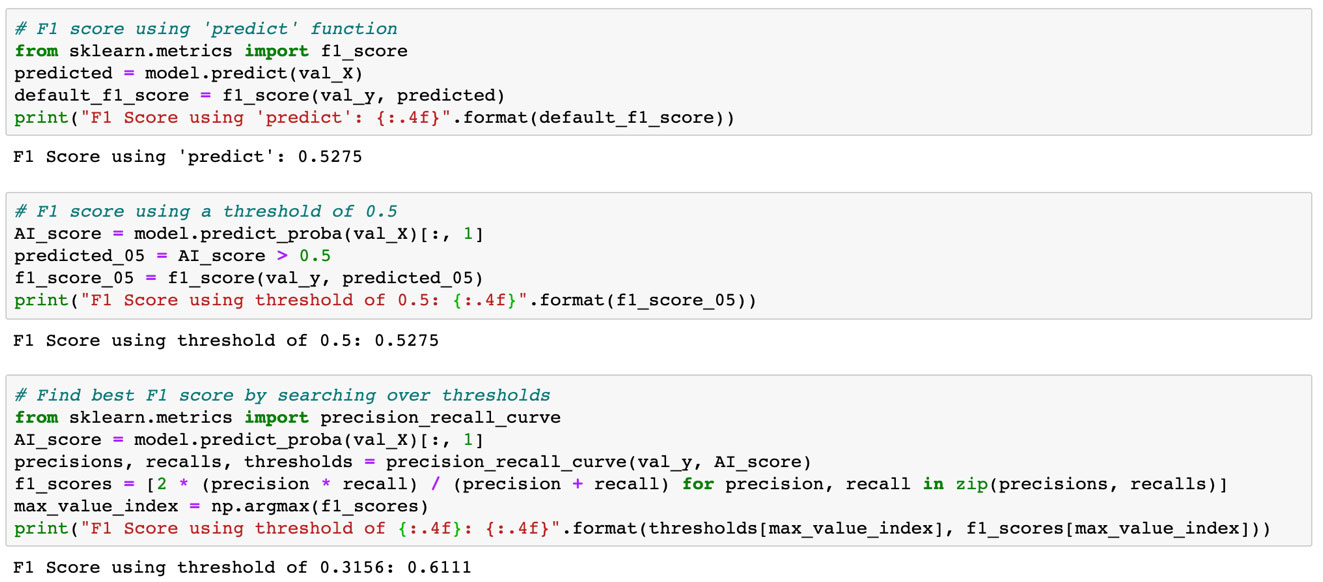

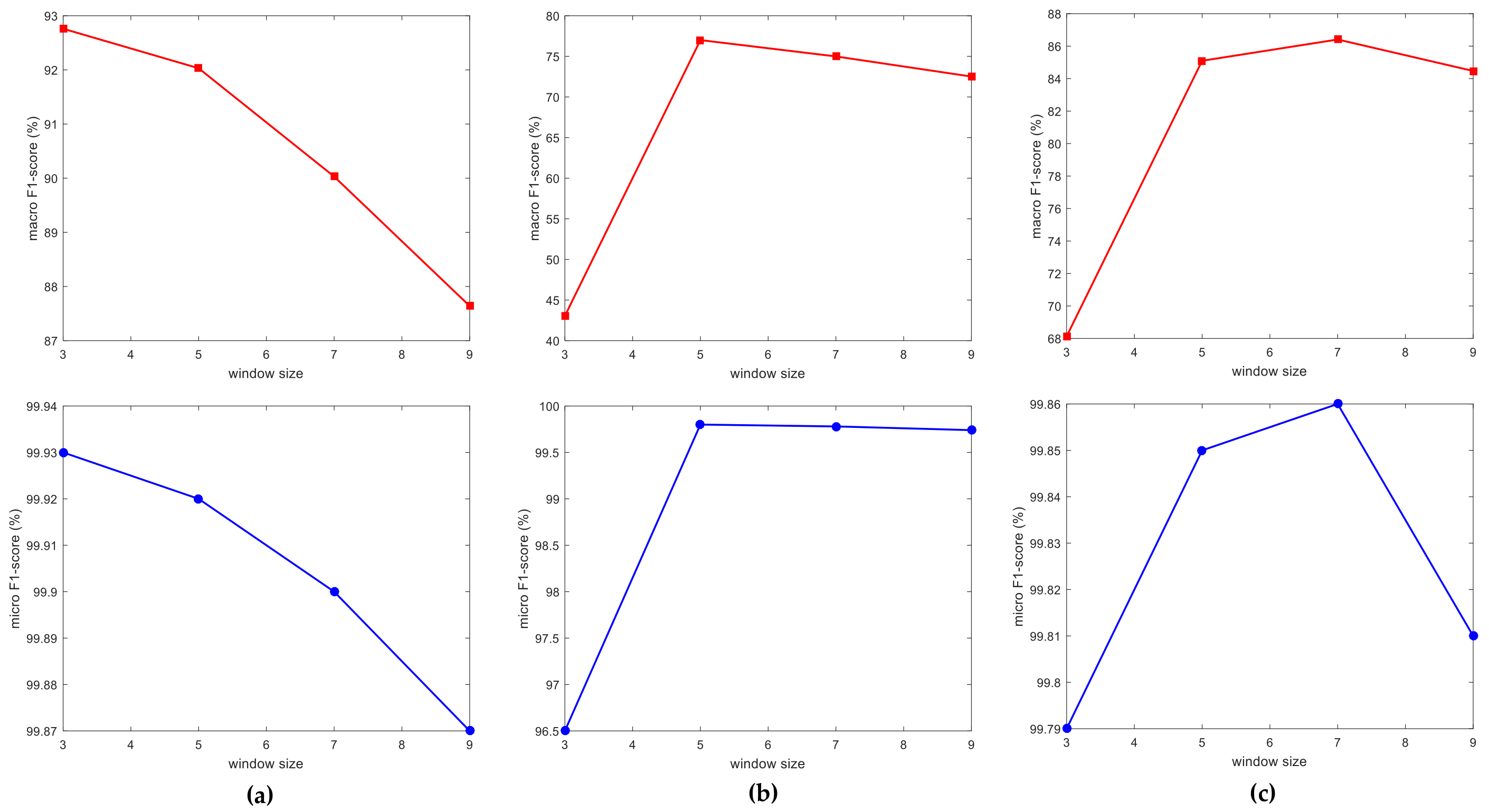

One plotting snippet sets `plt.rcParams['figure.figsize']` to 20 by 10, creates a figure with a 1×2 `gridspec.GridSpec`, and on a 3D subplot (`projection='3d'`) calls `ax.plot_trisurf(precision, recall, fscore, cmap=plt.cm.jet, linewidth=0.2, vmin=0, vmax=1)`. It labels the axes `precision`, `recall`, and `fscore_label` (the last with `rotation=0`) and sets the viewing angle with `ax.view_init(30, 125)`. A second subplot, `ax2 = plt.subplot(gs[0, 1])`, draws a scatter of precision against recall colored by `fscore` with the same colormap.

The F1 score is the harmonic mean of precision and recall. We can adjust the decision threshold to optimize the F1 score.

`y_true`: 1d array-like, or label indicator array / sparse matrix (the ground-truth labels).
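To make the threshold adjustment concrete, here is a minimal sketch in plain Python. The function names, the toy labels, the scores, and the candidate thresholds are all assumptions chosen for illustration; they are not taken from the snippet above. Each tuple in `curve` is one point you could plot (threshold on the x-axis, precision, recall, or F1 on the y-axis).

```python
def precision_recall_f1(y_true, scores, threshold):
    """Return (precision, recall, f1) when scores >= threshold count as positive."""
    pairs = list(zip(y_true, scores))
    tp = sum(1 for y, s in pairs if s >= threshold and y == 1)
    fp = sum(1 for y, s in pairs if s >= threshold and y == 0)
    fn = sum(1 for y, s in pairs if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def best_threshold(y_true, scores, thresholds):
    """Return the (threshold, f1) pair with the highest F1 among the candidates."""
    results = [(t, precision_recall_f1(y_true, scores, t)[2]) for t in thresholds]
    return max(results, key=lambda pair: pair[1])


# Hypothetical toy data: ground-truth labels and classifier scores.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
thresholds = [0.2, 0.35, 0.5, 0.75]

# One (threshold, precision, recall, f1) tuple per candidate threshold.
curve = [(t, *precision_recall_f1(y_true, scores, t)) for t in thresholds]
best_t, best_f1 = best_threshold(y_true, scores, thresholds)
print(best_t, round(best_f1, 3))
```

On this toy data the sweep picks the threshold 0.35, where recall is still 1 and precision has risen to 2/3.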

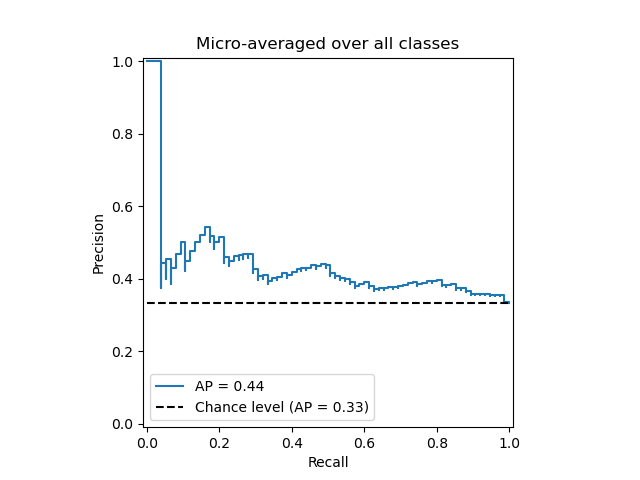

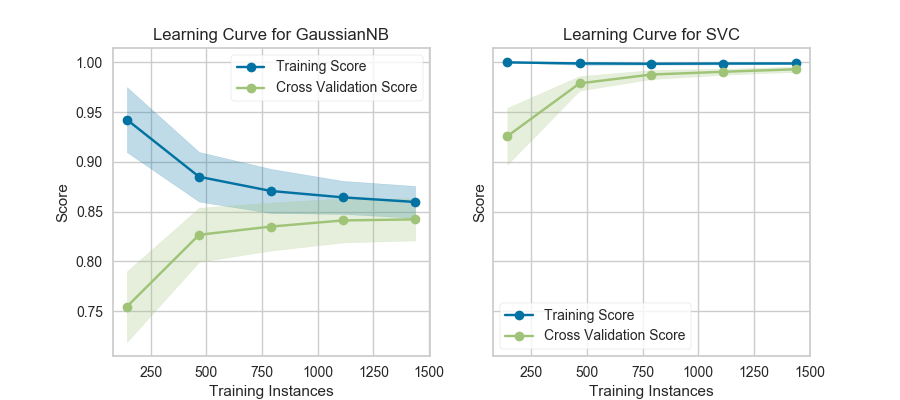

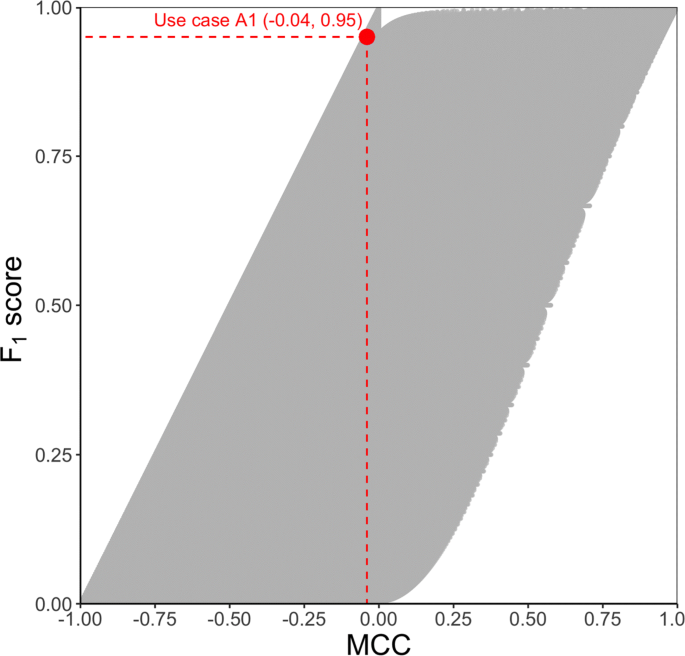

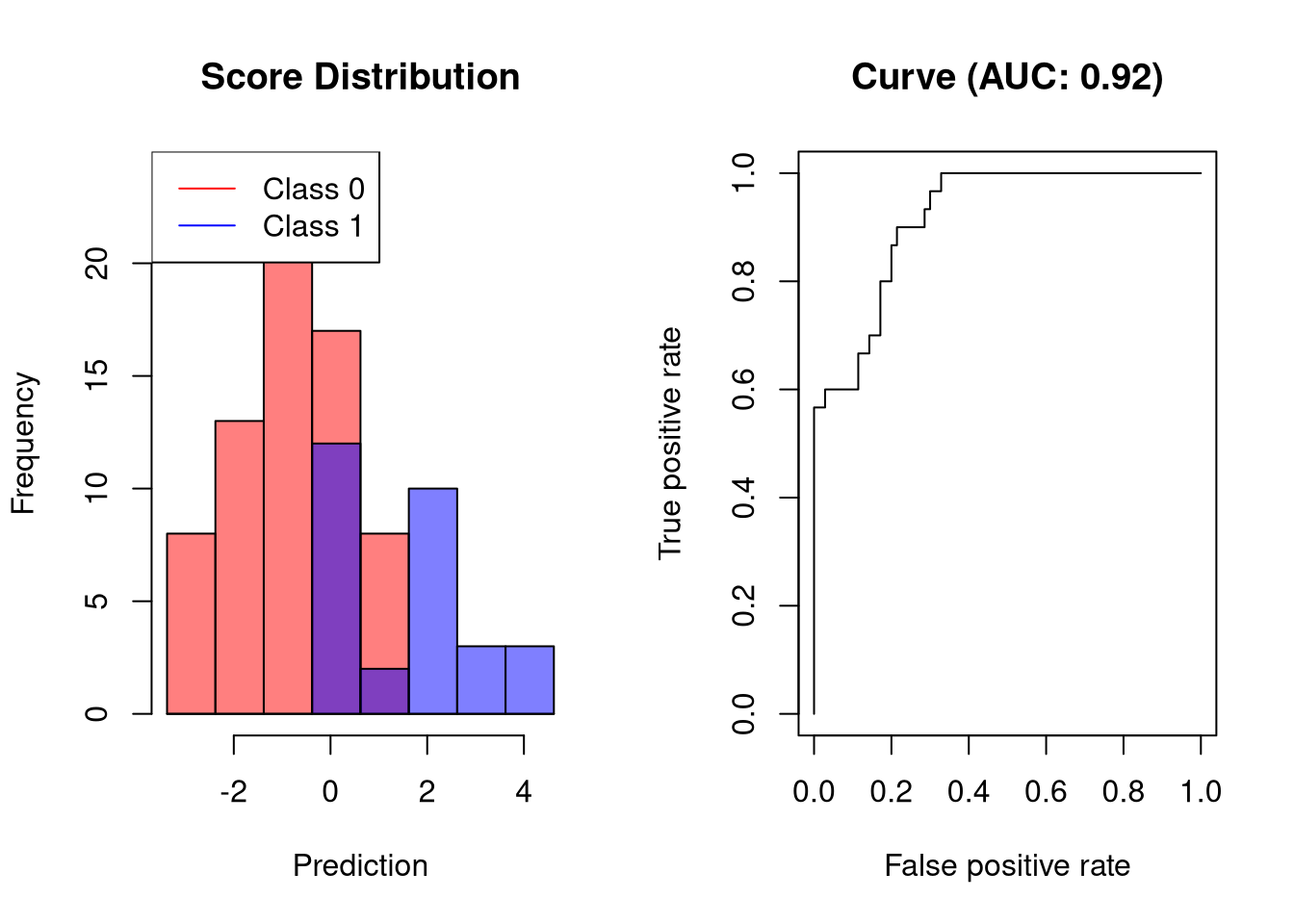

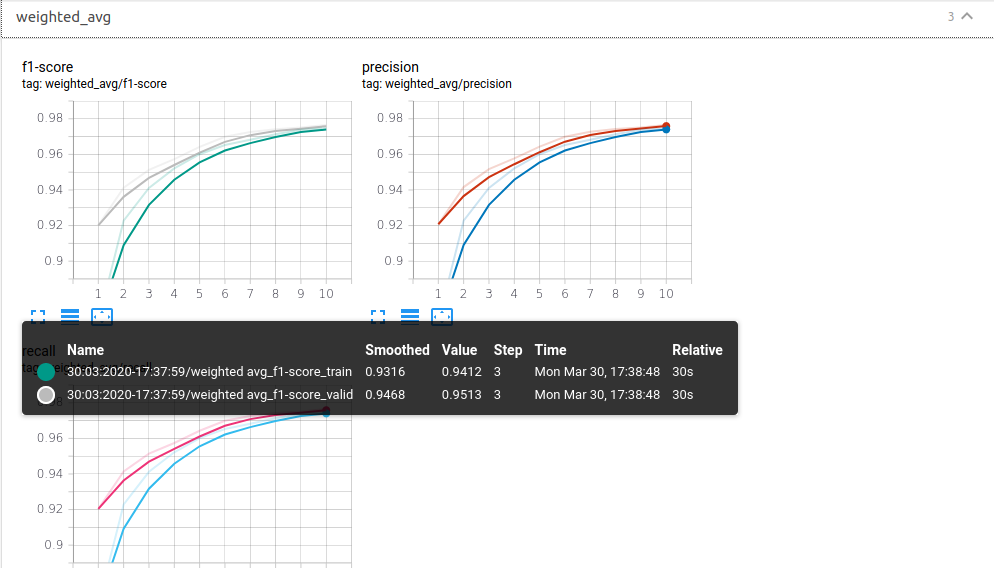

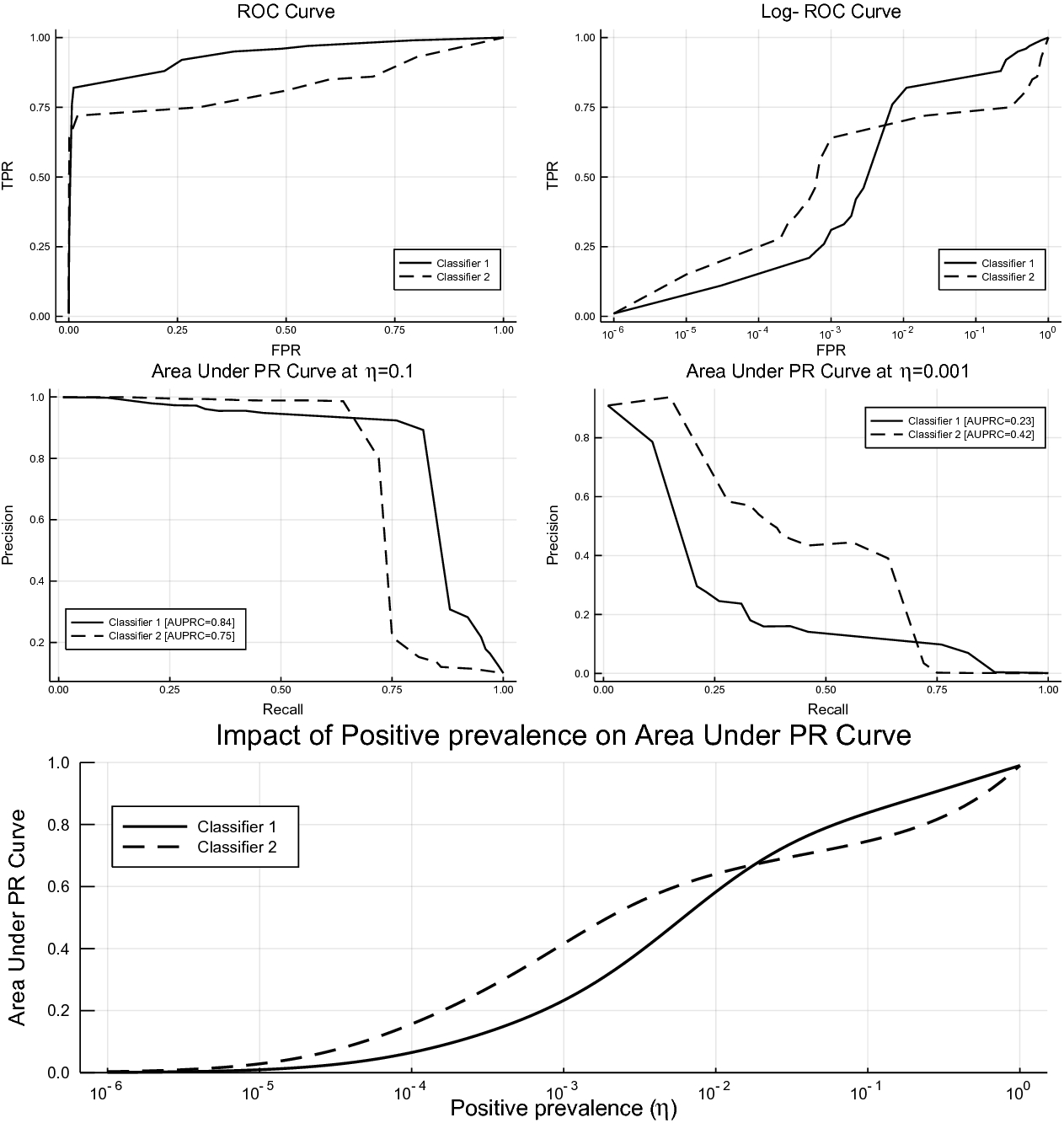

The analysis of ROC performance in graphs with this warping of the axes was used by psychologists in perception studies halfway through the 20th century. The plotting function is declared as `def plot(precision, recall, fscore, fscore_label)`. To validate a model we need a scoring function (see Metrics and scoring). The F1 score is the harmonic mean of precision and sensitivity, where sensitivity is another name for recall.
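The harmonic-mean statement can be written out in terms of confusion-matrix counts. The symbols $TP$, $FP$, and $FN$ (true positives, false positives, false negatives) and the shorthand $P$ and $R$ for precision and recall are notation introduced here for the derivation:

$$
\begin{aligned}
P &= \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \\
F_1 &= \frac{2}{\dfrac{1}{P} + \dfrac{1}{R}} = \frac{2 \, P \, R}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}.
\end{aligned}
$$

The last form shows that true negatives never enter the F1 score.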

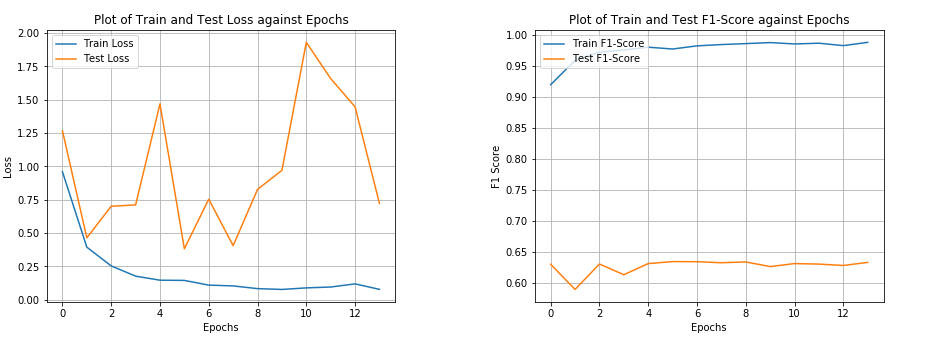

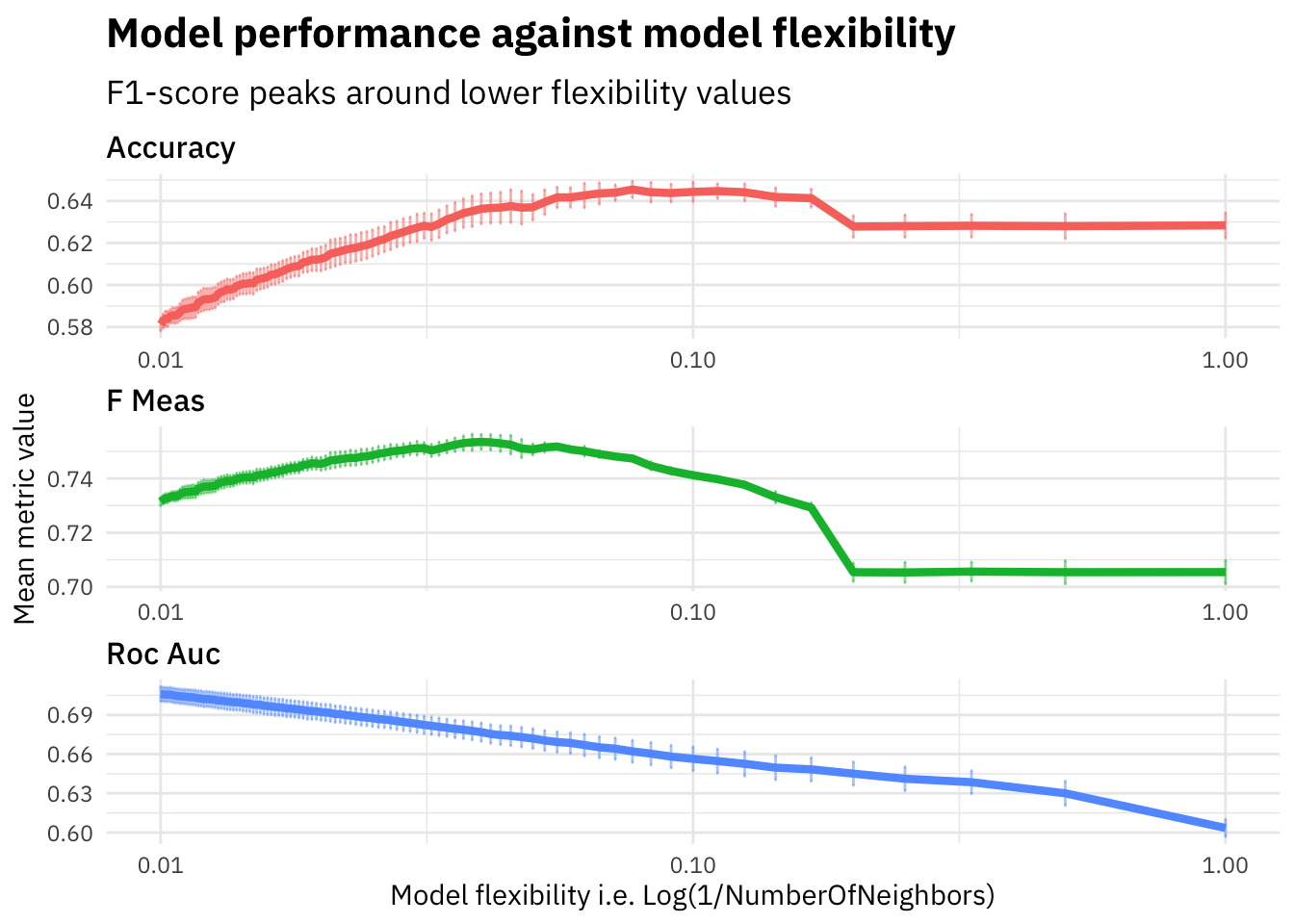

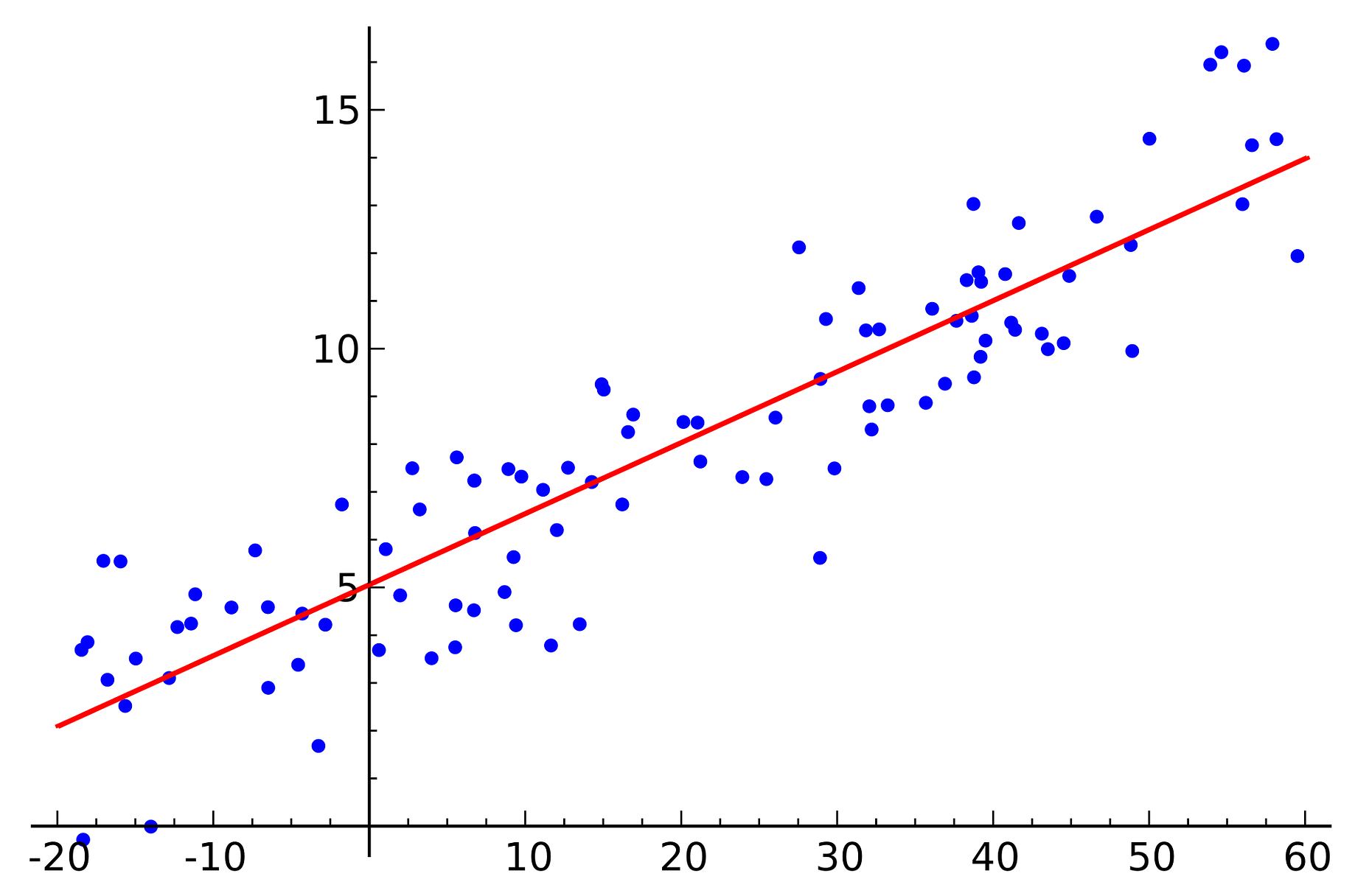

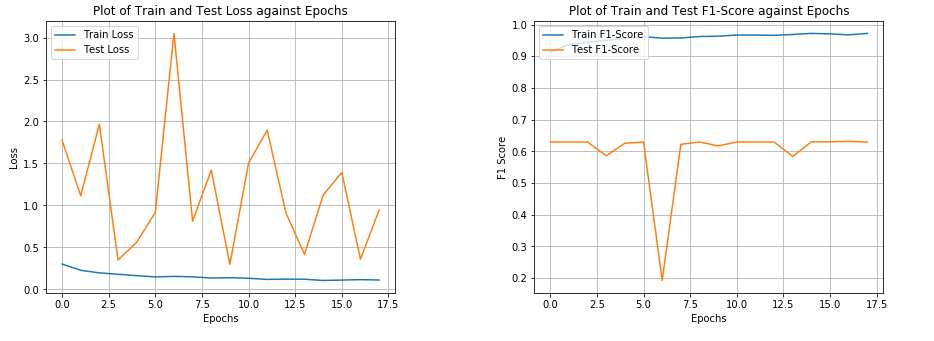

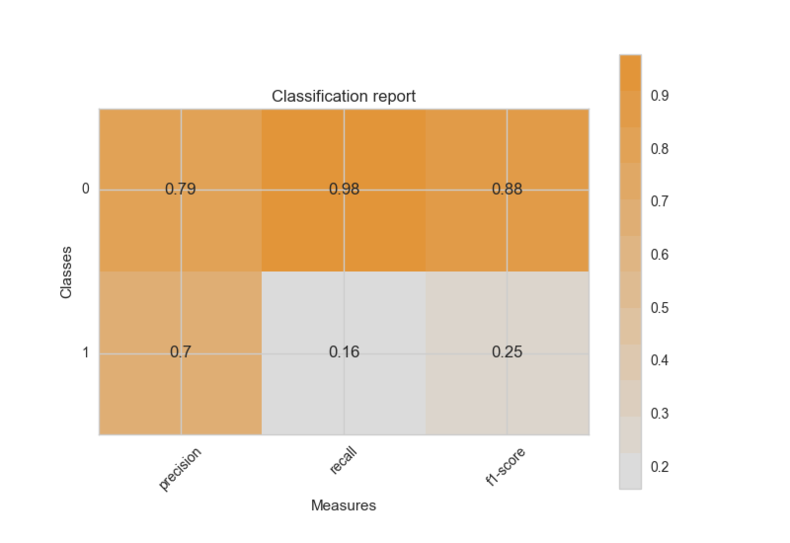

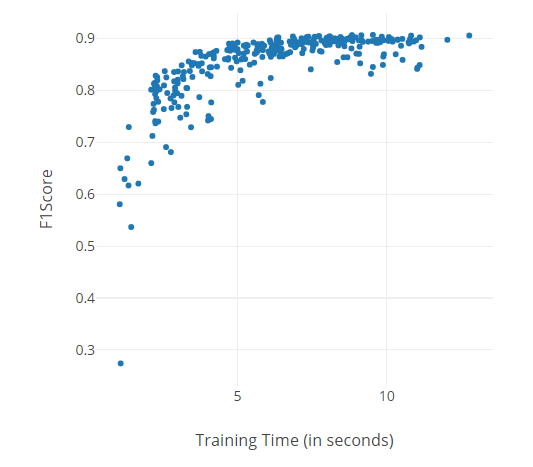

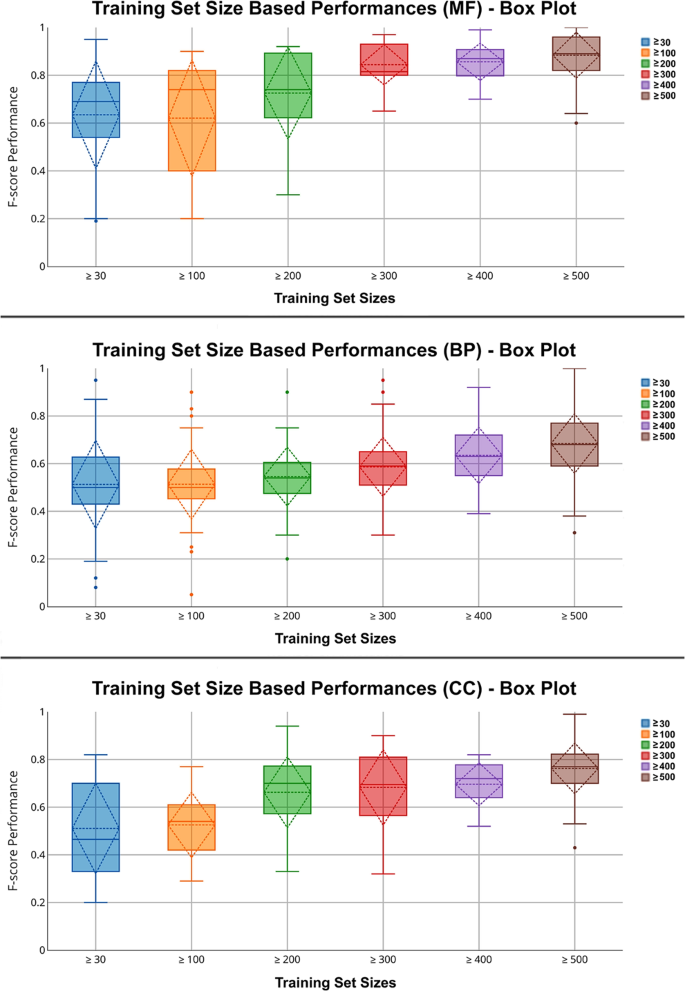

A scoring function quantifies the quality of predictions, for example accuracy for classifiers. The proper way of choosing multiple hyperparameters of an estimator is of course grid search or a similar method (see Tuning the hyper-parameters of an estimator) that selects the hyperparameters with the maximum score. When the dataset is imbalanced, it is more advisable to measure the model's effectiveness with the F1 score than with accuracy. Read more in the User Guide. The DET plot is used extensively in the automatic speaker recognition community, where the name DET was first used.
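A small sketch of why the F1 score is preferred on imbalanced data. The 5-versus-95 class split and both sets of predictions are invented for illustration, and `accuracy_and_f1` is a hypothetical helper rather than a library function.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, f1) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


# Hypothetical imbalanced dataset: 5 positives followed by 95 negatives.
y_true = [1] * 5 + [0] * 95

# Model A never predicts the positive class.
always_negative = [0] * 100

# Model B finds 4 of the 5 positives at the cost of 6 false alarms.
some_positives = [1, 1, 1, 1, 0] + [1] * 6 + [0] * 89

acc_a, f1_a = accuracy_and_f1(y_true, always_negative)
acc_b, f1_b = accuracy_and_f1(y_true, some_positives)
print(acc_a, f1_a)
print(acc_b, round(f1_b, 3))
```

Model A has the higher accuracy (0.95 against 0.93) even though it never detects a positive, while its F1 score is 0. Model B's F1 score of about 0.53 reflects that it actually finds most of the positive cases.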

The formula for the F1 score is $F_1 = 2 \times \dfrac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$. In the multi-class and multi-label case, this is the average of the F1 score of each class, with weighting depending on the `average` parameter.

The F1 score is calculated from the precision and recall of the test. Precision is the number of correctly identified positive results divided by the number of all results predicted as positive, including those predicted incorrectly. Recall is the number of correctly identified positive results divided by the number of all samples that should have been identified as positive.

Notice that either precision or recall on its own can be pushed toward a perfect score by moving the decision threshold. Lowering it until everything is predicted positive gives a recall of 1, while raising it usually improves precision at the expense of recall. This is why the F1 score combines the two.
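The per-class averaging described above can be sketched in plain Python. This is an illustration of macro and support-weighted averaging under assumed names (`per_class_f1`, `averaged_f1`) and made-up labels. It is not the implementation of any particular library, although the `average` argument is named after the parameter mentioned in the text.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {label: f1}, treating each label in turn as the positive class."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def averaged_f1(y_true, y_pred, average="macro"):
    """Average the per-class F1 scores, unweighted or weighted by class support."""
    scores = per_class_f1(y_true, y_pred)
    if average == "macro":
        return sum(scores.values()) / len(scores)
    if average == "weighted":
        support = Counter(y_true)  # number of true samples per class
        return sum(scores[c] * support[c] for c in scores) / len(y_true)
    raise ValueError(f"unsupported average: {average!r}")


# Hypothetical three-class example.
y_true = ["a", "a", "a", "a", "b", "b", "c"]
y_pred = ["a", "a", "a", "b", "b", "c", "c"]

macro = averaged_f1(y_true, y_pred, average="macro")
weighted = averaged_f1(y_true, y_pred, average="weighted")
print(round(macro, 3), round(weighted, 3))
```

Macro averaging treats every class equally, so rare classes count as much as common ones. Weighted averaging scales each class by its number of true samples, which here pulls the result toward the well-predicted majority class `a`.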